Copy process became longer/larger with no clear cause

Raindogtoo Jun 12 8:38PM 2018 GUI

Use case:

- Local storage located on Ubuntu server

- Backups from three clients every few hours

- Backup of the Unbuntu files once per night

- Copy from local to Wasabi storage after local back up completes

- Total chunk count is stable at a little less than 200K

- Storage size (local and cloud) is roughly 1.5TB

Observations:

- When I set up this process, the cloud copy operation would move roughly 2K chunks every evening and the job would finish in 4-5 hours.

- I've noticed that it is now copying over 6K chunks each night and often taking 10+ hours to complete

- I can't find where there's been a major change in the the volume of new/modified files on the target systems.

Issues:

- The backup/copy process is now spilling over to work hours impacting bandwidth

- I'm getting notices from my ISP about the excessive bandwidth usage

Any suggestions on how I can determine what changed and why the total number of chunks copied each evening has tripled?

gchen Jun 12 10:42PM 2018

Can you first make sure that the volume of new/modified files stay relatively constant? Did the average size of chunks change significantly?

Raindogtoo Jun 13 6:37AM 2018

How can I discern a change in avg chunk size?

gchen Jun 14 11:39AM 2018

I think one way to check the timestamps of those chunk files to figure out when they were uploaded, and then run a script to compute the average chunk size over time to see if there was a sudden change.

Raindogtoo Jun 15 9:16AM 2018

I've started some testing and realize that I'm misunderstanding some of Duplicacy's output and processes.

Let me ask two questions and then probably close this and start a new thread if I run into problems:

- With the "copy" command, an example output line is: "Chunk xxx (y/z) copied to the destination". If "y" is the chunk being copied, what does "z" represent?

- If I have two storage pools. Is there a way to reconcile them so they contain exactly the same information (chunks and snapshots)? Hopefully the answer doesn't involve deleting one of the pools because they are both roughly 1.5TB.

towerbr Jun 15 5:25PM 2018

With the "copy" command, an example output line is: "Chunk xxx (y/z) copied to the destination". If "y" is the chunk being copied, what does "z" represent?

The total chunks, see the last lines of the log:

...

INFO SNAPSHOT_COPY Chunk 900c04..........a55041 (2214/2239) copied to the destination

...

INFO SNAPSHOT_COPY Copy complete, 2239 total chunks, 184 chunks copied, 2055 skipped

gchen Jun 16 8:46PM 2018

If I have two storage pools. Is there a way to reconcile them so they contain exactly the same information (chunks and snapshots)? Hopefully the answer doesn't involve deleting one of the pools because they are both roughly 1.5TB.

If the two storages are created without encryption, then you can just merge their chunks and snapshot directories. Otherwise, there is no easy way to reconcile them, although it is technically possible. See this issue for a brief overview of steps.

Raindogtoo Jun 18 6:35AM 2018

"The total chunks, see the last lines of the log:..."

The total chunks of what? "z" is not the total chunk in the the storage repository. I found that out though trial and error.

towerbr Jun 18 7:56AM 2018

The total chunks of what?

Interesting question ... I always thought it was the total number of chunks in the storage from where it is being copied.

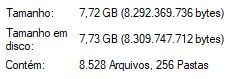

I checked into a local repository that is copied regularly to Wasabi.

Windows reports 8528 files in "chunks" folder:

The check command also reports 8528 chunks (although there should be a total in the new column too, after all is the total of this column):

pastebin version, better display of columns

Listing all chunks

All chunks referenced by snapshot [repository] at revision 1 exist

All chunks referenced by snapshot [repository] at revision 2 exist

All chunks referenced by snapshot [repository] at revision 3 exist

All chunks referenced by snapshot [repository] at revision 4 exist

All chunks referenced by snapshot [repository] at revision 5 exist

All chunks referenced by snapshot [repository] at revision 6 exist

All chunks referenced by snapshot [repository] at revision 7 exist

All chunks referenced by snapshot [repository] at revision 8 exist

All chunks referenced by snapshot [repository] at revision 9 exist

All chunks referenced by snapshot [repository] at revision 10 exist

All chunks referenced by snapshot [repository] at revision 11 exist

All chunks referenced by snapshot [repository] at revision 12 exist

All chunks referenced by snapshot [repository] at revision 13 exist

All chunks referenced by snapshot [repository] at revision 14 exist

All chunks referenced by snapshot [repository] at revision 15 exist

All chunks referenced by snapshot [repository] at revision 16 exist

All chunks referenced by snapshot [repository] at revision 17 exist

snap | rev | | files | bytes | chunks | bytes | uniq | bytes | new | bytes |

[repository] | 1 | @ 2018-05-31 00:23 -hash | 2456 | 9,185M | 7349 | 6,961M | 4 | 712K | 7349 | 6,961M |

[repository] | 2 | @ 2018-05-31 00:41 | 2407 | 9,190M | 7359 | 6,961M | 4 | 714K | 14 | 1,048K |

[repository] | 3 | @ 2018-05-31 00:48 | 2407 | 9,190M | 7369 | 6,967M | 13 | 6,045K | 15 | 6,796K |

[repository] | 4 | @ 2018-06-01 12:21 | 2406 | 9,196M | 7395 | 6,983M | 0 | 0 | 41 | 23,408K |

[repository] | 5 | @ 2018-06-03 20:44 | 2406 | 9,196M | 7395 | 6,983M | 0 | 0 | 0 | 0 |

[repository] | 6 | @ 2018-06-04 20:01 | 2407 | 9,233M | 7412 | 7,007M | 25 | 11,209K | 53 | 44,188K |

[repository] | 7 | @ 2018-06-05 20:02 | 2413 | 9,312M | 7466 | 7,065M | 23 | 8,487K | 81 | 70,258K |

[repository] | 8 | @ 2018-06-06 20:20 | 2422 | 9,346M | 7490 | 7,092M | 26 | 16,851K | 51 | 37,699K |

[repository] | 9 | @ 2018-06-07 20:12 | 2427 | 9,307M | 7482 | 7,085M | 17 | 5,797K | 366 | 379,181K |

[repository] | 10 | @ 2018-06-08 12:26 | 2430 | 9,388M | 7527 | 7,127M | 34 | 25,652K | 64 | 51,327K |

[repository] | 11 | @ 2018-06-09 22:13 | 2427 | 9,325M | 7531 | 7,113M | 23 | 8,695K | 146 | 116,753K |

[repository] | 12 | @ 2018-06-10 20:03 | 2436 | 9,321M | 7547 | 7,124M | 33 | 24,552K | 63 | 41,660K |

[repository] | 13 | @ 2018-06-10 22:46 | 2432 | 9,224M | 7507 | 7,079M | 15 | 5,313K | 47 | 36,935K |

[repository] | 14 | @ 2018-06-11 20:12 | 2432 | 9,234M | 7521 | 7,089M | 33 | 16,252K | 42 | 22,764K |

[repository] | 15 | @ 2018-06-12 20:05 | 2438 | 9,239M | 7516 | 7,086M | 28 | 8,671K | 73 | 51,075K |

[repository] | 16 | @ 2018-06-13 20:02 | 2433 | 9,244M | 7515 | 7,098M | 31 | 20,682K | 34 | 24,953K |

[repository] | 17 | @ 2018-06-17 20:32 | 2431 | 9,237M | 7500 | 7,089M | 89 | 61,525K | 89 | 61,525K |

[repository] | all | | | | 8528 | 7,908M | 8528 | 7,908M | | |

But the copy command reports 7717:

2018-06-18 09:40:30.248 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 1 already exists at the destination storage

2018-06-18 09:40:30.424 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 2 already exists at the destination storage

2018-06-18 09:40:30.607 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 3 already exists at the destination storage

2018-06-18 09:40:30.777 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 4 already exists at the destination storage

2018-06-18 09:40:30.952 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 5 already exists at the destination storage

2018-06-18 09:40:31.123 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 6 already exists at the destination storage

2018-06-18 09:40:31.302 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 7 already exists at the destination storage

2018-06-18 09:40:31.474 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 8 already exists at the destination storage

2018-06-18 09:40:31.663 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 9 already exists at the destination storage

2018-06-18 09:40:31.839 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 10 already exists at the destination storage

2018-06-18 09:40:32.019 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 11 already exists at the destination storage

2018-06-18 09:40:32.192 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 12 already exists at the destination storage

2018-06-18 09:40:32.364 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 13 already exists at the destination storage

2018-06-18 09:40:33.081 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 14

2018-06-18 09:40:33.121 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 15

2018-06-18 09:40:33.138 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 16

2018-06-18 09:40:33.153 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 17

...

2018-06-18 09:40:46.661 INFO SNAPSHOT_COPY Chunk fca6...84ef (118/7717) skipped at the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk 9595...4386 (1/7717) copied to the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk 642c...293f (120/7717) skipped at the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk b113...0890 (121/7717) skipped at the destination

Now I'm in doubt too ... @gchen, could you clarify?

gchen Jun 18 10:26AM 2018

7717 is the number of chunks that need to be copied. It is not the total number of chunks in the storage.

towerbr Jun 18 12:51PM 2018

I'm still not sure if I understood ...

The above local storage has a total of 8528 chunks in 17 revisions. Of these, 13 have already been copied to the cloud storage:

SNAPSHOT_EXIST Snapshot [repository] at revision 1 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 2 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 3 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 4 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 5 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 6 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 7 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 8 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 9 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 10 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 11 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 12 already exists at the destination storage

SNAPSHOT_EXIST Snapshot [repository] at revision 13 already exists at the destination storage

SNAPSHOT_COPY Copying snapshot [repository] at revision 14

SNAPSHOT_COPY Copying snapshot [repository] at revision 15

SNAPSHOT_COPY Copying snapshot [repository] at revision 16

SNAPSHOT_COPY Copying snapshot [repository] at revision 17

First question: the 4 revisions not yet copied would have 7717 chunks (out of a total of 8528)? It seems to me a lot...

I copied it and the log showed:

...

SNAPSHOT_COPY Chunk bd86...e91d (7643/7717) skipped at the destination

SNAPSHOT_COPY Chunk 7bc7...37ad (7591/7717) copied to the destination

SNAPSHOT_COPY Chunk 0f8a...ca57 (7645/7717) skipped at the destination

SNAPSHOT_COPY Chunk 0ef1...ef23 (7646/7717) skipped at the destination

SNAPSHOT_COPY Chunk 5c97...8cd8 (7647/7717) skipped at the destination

SNAPSHOT_COPY Chunk 09ea...c63f (7635/7717) copied to the destination

SNAPSHOT_COPY Chunk a0cc...a77d (7649/7717) skipped at the destination

...

SNAPSHOT_COPY Chunk 13f4...1e6b (7715/7717) skipped at the destination

SNAPSHOT_COPY Chunk 6335...2525 (7716/7717) skipped at the destination

SNAPSHOT_COPY Chunk 0534...7c85 (7717/7717) skipped at the destination

SNAPSHOT_COPY Chunk f0e7...2cb6 (7648/7717) copied to the destination

SNAPSHOT_COPY Chunk afe7...fea2 (7650/7717) copied to the destination

SNAPSHOT_COPY Copy complete, 7717 total chunks, 235 chunks copied, 7482 skipped

SNAPSHOT_COPY Copied snapshot [repository] at revision 14

SNAPSHOT_COPY Copied snapshot [repository] at revision 15

SNAPSHOT_COPY Copied snapshot [repository] at revision 16

SNAPSHOT_COPY Copied snapshot [repository] at revision 17

That is, only 235 chunnks were copied, as expected, representing the most recent file changes.

So 7717 doesn't look like the number of chunks to be copied.

TheBestPessimist Jun 19 10:57AM 2018

Can it be that 7717 is the number of chunks in [all the snapshots that need to be copied]? This includes (due to deduplication) also the chunks which already exist (which are skipped as you see in the messages).

This is an example. My understanding can be wrong:

backup | chunks | newchunks:

1 | 100 | 100

2 | 160 | 60

3 | 161 | 1

do the first copy to cloud:

chunks: 161 + 160 + 100 chunks in total to be uploaded w/o taking into account already uploaded (none) | 161 are actually uploaded (rest are skipped since they're common to all snapshots)

4 | 165 | 4

5 | 120 (because you deleted something) | 10 (you also modified something and 10 chunks are new)

do the second copy to cloud:

chunks: 165 + 120 in total w/o taking into account already uploaded (161), 4+10 are actually uploaded (the rest are skipped as they already exist from before)

towerbr Jun 19 12:35PM 2018

Hi Best,

I understood your point, but it's a very creative math ... ;-)

the second copy to cloud: chunks: 165 + 120 in total

From my point of view, the total in this case should be only 120, the current total number of chunks in the repository.

4 + 10 are actually uploaded

And this (14) should be the total number "to be copied".

That simple.

But I'm still in doubt about that 7717 ...